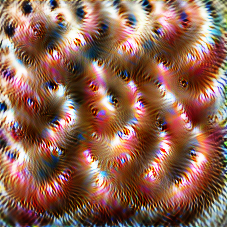

Descript's Podcast Studio

The all-new Descript podcast studio. (Descript)

Descript has launched its podcast studio app. As I wrote in DT #18, Descript is a great example of a productized AI company:

Descript takes an audio file (like a podcast or conference talk recording) as input and transcribes it using machine learning. Then, it lets you edit the transcript and audio in synchrony, automatically moving audio clips around as you cut, paste, and shuffle around bits of text.

The team has now launched a multitrack podcast production app using this same technology. As they put it, it’s “the version of Descript we’ve dreamed of since conceiving of the company.” The podcast studio allows you to edit multiple speakers’ audio tracks by editing the transcribed text of what they said; Descript takes care of splicing and syncing all the audio.
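To make the idea of editing audio by editing text concrete, here is a minimal sketch. It assumes the transcription step yields a start and end timestamp for every word; the `Word` class and `segments_to_keep` function are hypothetical names for illustration, not Descript's actual implementation. Deleting a word from the transcript then reduces to computing which time ranges of the original recording survive.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Word:
    """A transcribed word with its position in the audio (hypothetical structure)."""
    text: str
    start: float  # seconds from the beginning of the recording
    end: float


def segments_to_keep(words, deleted):
    """Return the (start, end) audio ranges left after deleting words by index.

    Consecutive kept words are merged into one range, so each deletion
    produces a single cut point in the audio.
    """
    segments = []
    prev_kept = None
    for i, word in enumerate(words):
        if i in deleted:
            continue
        if segments and prev_kept == i - 1:
            # Extend the current range: this word directly follows the last kept one.
            segments[-1] = (segments[-1][0], word.end)
        else:
            # A deletion (or the start of the file) precedes this word: open a new range.
            segments.append((word.start, word.end))
        prev_kept = i
    return segments


words = [
    Word("so", 0.0, 0.3),
    Word("um", 0.3, 0.8),
    Word("welcome", 0.8, 1.4),
    Word("back", 1.4, 1.8),
]

# Deleting "um" in the text leaves two audio ranges to splice together.
print(segments_to_keep(words, deleted={1}))
# [(0.0, 0.3), (0.8, 1.8)]
```

A multitrack editor would presumably apply the same cuts to every speaker's track so that they stay in sync, but that part is not sketched here.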

It also comes with some crazy new (beta) functionality called Overdub. The feature lets you replace a few words of a transcript and then generates audio of the newly typed text in your own voice.

Sounds amazing! But also dangerous—what if someone has a recording of your voice? Can they just make a convincing audio clip of you saying whatever they want? Nope. Lyrebird, the team behind the feature, has built in safeguards to prevent that from happening:

Invariably, to first experience Overdub is to experience wunderschrecken—a simultaneous feeling of wonder and dread. Rest assured, you can only use Overdub on your own voice. We built this feature to save you the tedium of re-recording/splicing time every time you make an editorial change, not as a way to make deep fakes.

The Lyrebird team deserves credit for figuring this out: to train a voice model, you need to record yourself speaking randomly generated sentences, which prevents others from using pre-existing recordings to create a model of your voice.
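The safeguard works like a challenge-response check, which can be sketched roughly as follows. This is my own illustration, not Lyrebird's code: the word list, `make_challenge`, and `matches_challenge` are all hypothetical, and the sketch assumes the service transcribes the submitted recording and compares it with the sentence it asked for. Because the sentence is freshly randomized, an existing recording of someone's voice will almost never contain it.

```python
import secrets

# Hypothetical vocabulary; a real system would presumably use a much larger one.
WORDS = [
    "amber", "river", "quiet", "lantern", "seven", "orchard",
    "copper", "window", "gentle", "harbor", "silver", "meadow",
]


def make_challenge(n_words=6):
    """Build a random sentence the user must read aloud."""
    return " ".join(secrets.choice(WORDS) for _ in range(n_words))


def normalize(text):
    """Lowercase and collapse whitespace so trivial differences do not matter."""
    return " ".join(text.lower().split())


def matches_challenge(challenge, transcript):
    """Accept a recording only if its transcript matches the requested sentence."""
    return normalize(challenge) == normalize(transcript)


challenge = make_challenge()
print(challenge)
print(matches_challenge(challenge, challenge.upper()))         # True
print(matches_challenge(challenge, "some old podcast audio"))  # False
```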

Read all about the new podcast studio and Overdub in Descript CEO Andrew Mason’s Medium post: Introducing Descript Podcast Studio & Overdub.

Papers with Code sotabench

The sotabench homepage. (sotabench)

The team behind Papers with Code has launched sotabench. The name derives from “state of the art (sota)” + “benchmark”, and its mission is precisely that: to benchmark every open source model, for free!

This is super cool. A researcher just needs to implement a small Python file that specifies how to run their model on some given test data. They can then submit their repository to sotabench, which tracks it and runs the model on standardized test data for every commit to the master branch. This way, it independently keeps track of whether models achieve the performance claimed by the authors (within some benchmark-specific error range).
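The core check described above can be sketched in a few lines. This is not the sotabench API; `accuracy` and `reproduces_claim` are hypothetical helpers, and the labels, predictions, claimed score, and tolerance are made-up numbers chosen only to show the comparison between a paper's claimed result and an independently measured one.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that equal the ground-truth labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def reproduces_claim(claimed, measured, tolerance):
    """True if the measured score is within the benchmark's error range of the claim."""
    return abs(claimed - measured) <= tolerance


# Made-up example data: standardized test labels and one model's outputs.
labels = [0, 1, 1, 0, 1]
predictions = [0, 1, 0, 0, 1]

measured = accuracy(predictions, labels)  # 4 of 5 correct
print(measured)                                                    # 0.8
print(reproduces_claim(claimed=0.82, measured=measured, tolerance=0.03))  # True
print(reproduces_claim(claimed=0.90, measured=measured, tolerance=0.03))  # False
```

Running a check like this on every commit is what would let a benchmark service flag a repository whose results drift away from the numbers in the paper.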

The project is run by Atlas ML, a company whose mission is to “advance open source deep learning” (emphasis mine).

We believe the software of the future should be accessible to everyone, not just large technology companies. We are realising this future by building breakthrough tooling that allows the world to build and collaborate on ambitious deep learning projects.

Atlas ML was co-founded by Robert Stojnic, one of the first Wikipedia engineers. It’s therefore not surprising that the team’s main objective is to push the open and collaborative values that also drive Wikipedia. The meta dataset resulting from sotabench will also surely lead to lots of interesting research on reproducibility and model characteristics vs. performance. Check out the project at sotabench.com.