Fireflies.ai: meeting audio to searchable notes

Fireflies.ai turns meetings into notes.

Fireflies.ai records and transcribes meetings, and automatically turns them into searchable, collaborative notes. The startup’s virtual assistant, adorably named Fred, hooks into Google Calendar so that it can automatically join an organization’s Zoom, Meet or Skype calls. As it listens in, it extracts useful notes and information which it can forward to appropriate people in the organization through integrations like Slack and Salesforce. Zach Winn for MIT News:

“[Fred] is giving you perfect memory,” says [Sam] Udotong, who serves as Fireflies’ chief technology officer. “The dream is for everyone to have perfect recall and make all their decisions based on the right information. So being able to search back to exact points in conversation and remember that is powerful. People have told us it makes them look smarter in front of clients.”

As someone who externalizes almost everything I need to remember into an (arguably overly) elaborate system of notes, calendars and to-do apps, I almost feel like this pitch is aimed directly at me. I haven’t had a chance to try it out yet, but I’m hoping to give it a shot on my next lunchclub.ai call (if my match is up for it, of course).

Fireflies is not alone, though. It looks like this is becoming a competitive space in productized AI, with Descript (DT #18, #24), Microsoft’s Project Denmark (#23), and Otter.ai (#40) all currently working on AI-enabled smart transcription and editing of long-form audio data. Exciting times!

Radiant Earth Crop Detection in Africa challenge

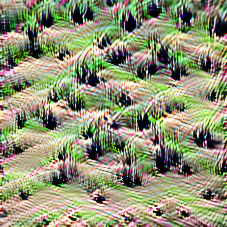

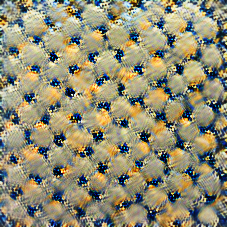

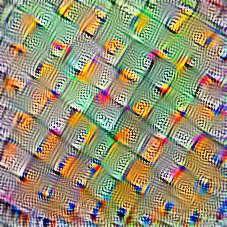

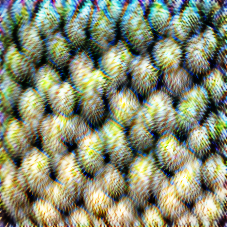

“Sample fields (color coded with their crop class) overlayed on Google basemap from Western Kenya.” (Radiant Earth)

The Radiant Earth Foundation announced the winners of their Crop Detection in Africa challenge. The competition was hosted on Zindi, a Kaggle-like platform that connects African data scientists to organizations with “the world’s most pressing challenges.” Detecting crops from satellite imagery comes with extra challenges in Africa due to limited training data and the small size of farms.

A total of 440 data scientists across the world participated in building a machine learning model for classifying crop types in farms across Western Kenya using training data hosted on Radiant MLHub. The training data contained crop types for a total of more than 4,000 fields (3,286 in the training and 1,402 in the testing datasets). Seven different crop classes were included in the dataset, including: 1) Maize, 2) Cassava, 3) Common Bean, 4) Maize & Common Bean (intercropping), 5) Maize & Cassava (intercropping), 6) Maize & Soybean (intercropping), 7) Cassava & Common Bean (intercropping). Two major challenges with this dataset were class imbalance and the intercropping classes that are a common pattern in smallholder farms in Africa.

As climate change will make farming more difficult in many regions across the world, this type of work is vital for protecting food production capacities. Knowing what is being planted where is an important first step in this process. Last year I covered the AI Sowing App from India (DT #20), another climate resilience project that helps farmers decide when to plant which crop using weather and climate data; better data on crop types and locations can certainly help initiatives like that as well.

Distill: Exploring Bayesian Optimization

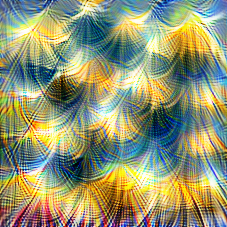

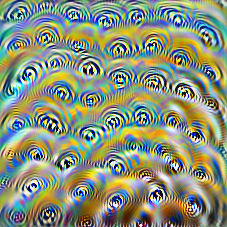

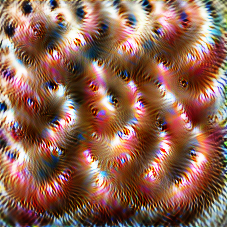

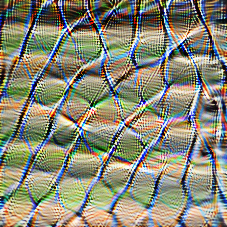

Bayesian optimization of finding gold along a line, using the probability of improvement (PI) acquisition function. (Agnihotri and Batra, 2020.)

Apoorv Agnihotri and Nipun Batra wrote an article for Distill titled Exploring Bayesian Optimization. This technique is used in hyperparameter optimization, where evaluating any one point (like the combination of a learning rate, a weight decay factor, and a data augmentation setting) is expensive: you need to train your entire model to know how well the hyperparameters performed.

This is where Bayesian optimization comes in. It centers around answering the question “Based on what we know so far, what point should we evaluate next?” The process uses acquisition functions to trade off exploitation (looking at points in the hyperparameter space that we think are likely to be good) with exploration (looking at points we’re very uncertain about). Given an appropriate acquisition function and priors, it can help find a good point in the space in surprisingly few iterations.
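To make that loop a bit more concrete, here is a minimal sketch of Bayesian optimization with the probability of improvement (PI) acquisition function on a one-dimensional "gold along a line" toy problem. This is not the code from the Distill article: the `gold_content` objective, the RBF kernel and its length scale, the zero-mean Gaussian process, the evaluation grid, and the `eps` exploration margin are all assumptions picked for illustration.

```python
import math
import numpy as np


def rbf_kernel(a, b, length_scale=0.1):
    """Squared-exponential kernel between two 1-D arrays of points."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)


def gp_posterior(x_obs, y_obs, x_query, noise=1e-5):
    """Posterior mean and std of a zero-mean GP at the query points."""
    k_oo = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_oq = rbf_kernel(x_obs, x_query)
    solved = np.linalg.solve(k_oo, k_oq)
    mean = solved.T @ y_obs
    # The RBF kernel has k(x, x) = 1, so the prior variance is 1 everywhere.
    var = 1.0 - np.sum(k_oq * solved, axis=0)
    return mean, np.sqrt(np.clip(var, 1e-12, None))


def probability_of_improvement(mean, std, best_y, eps=0.01):
    """P(f(x) > best_y + eps) under the GP posterior (normal CDF)."""
    z = (mean - best_y - eps) / std
    return np.array([0.5 * (1.0 + math.erf(v / math.sqrt(2.0))) for v in z])


def bayes_opt(objective, n_iters=15, seed=0):
    """Repeatedly evaluate the point with the highest PI on a fixed grid."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 201)
    x_obs = rng.uniform(0.0, 1.0, size=2)  # two random starting digs
    y_obs = np.array([objective(x) for x in x_obs])
    for _ in range(n_iters):
        mean, std = gp_posterior(x_obs, y_obs, grid)
        pi = probability_of_improvement(mean, std, y_obs.max())
        x_next = grid[int(np.argmax(pi))]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))
    best = int(np.argmax(y_obs))
    return float(x_obs[best]), float(y_obs[best]), len(y_obs)


def gold_content(x):
    """Hypothetical expensive objective: one gold vein centered at x = 0.7."""
    return math.exp(-((x - 0.7) ** 2) / 0.02)


best_x, best_y, n_evals = bayes_opt(gold_content)
print(f"best location {best_x:.3f}, gold {best_y:.3f}, after {n_evals} digs")
```

A larger `eps` pushes the search toward exploration, because a point then needs to beat the current best by a wider margin before it counts as an improvement. In a real hyperparameter search, `gold_content` would be replaced by a full training run, which is exactly why keeping the number of evaluations small matters.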

Bayesian optimization was one of the tougher subjects to wrap my head around in graduate school, so I was very excited to see it get the Distill treatment. Agnihotri and Batra explain the process through an analogy of picking the best places to dig for gold which, incidentally, was also one of its first real-world applications in the 1950s! You can read the full explainer here; also check out DragonFly and BoTorch, two tools for automated Bayesian optimization from my ML resources list.

Pinterest's AI-powered automatic board groups

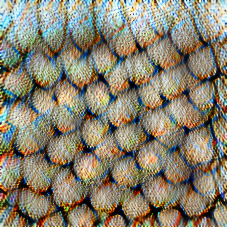

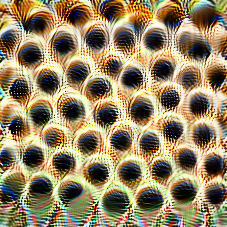

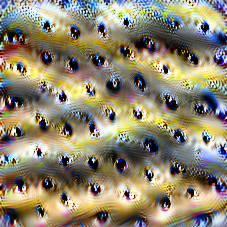

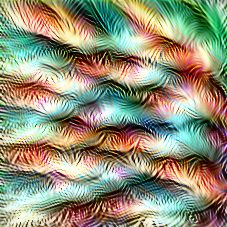

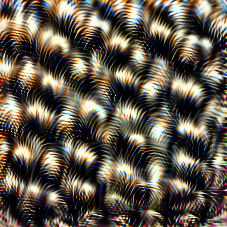

Pinterest’s UX flow for ML-based grouping within boards. (Pinterest Engineering Blog.)

Pinterest has added new AI-powered functionality for grouping images and other pins on a board. The social media platform is mostly centered around finding images and collecting (pinning) them on boards. After working on a board for a while, though, some users may pin so much that they no longer see the forest for the trees. That’s where this new feature comes in:

For example, maybe a Pinner is new to cooking but has been saving hundreds of recipe Pins. With this new tool, Pinterest may suggest board sections like “veggie meals” and “appetizers” to help the Pinner organize their board into a more actionable meal plan.

Here’s how it works:

- When a user views a board that has a potential grouping, a suggestion pops up showing the suggested group and a few sample pins.

- If the user taps it, the suggestion expands into a view with all the suggested pins, where she can deselect any pins she does not want to add to the group. (Which I’m sure is very valuable training data!)

- The user can edit the name for the section, and then it gets added to her board.

Coming up with potential groupings is a three-step process. First, a graph convolutional network called PinSage computes an embedding based on text associated with the pin, visual features extracted from the image, and the graph structure. Then the Ward clustering algorithm (chosen because it does not require a predefined number of clusters) generates potential groups. Finally, a filtered count of common annotations for pins in the group decides the proposed group name.
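The last two steps can be sketched in a few lines of plain Python. This is only an illustration and not Pinterest's implementation: the two-dimensional vectors stand in for real PinSage embeddings, the `max_cost` merge threshold is a made-up value, and the "filter" here is simply a hypothetical stopword set of overly generic annotations, since the post summary above does not spell out how the filtering works.

```python
from collections import Counter
from itertools import combinations


def ward_cost(a, b):
    """Increase in within-cluster sum of squares if a and b are merged."""
    sq_dist = sum((p - q) ** 2 for p, q in zip(a["centroid"], b["centroid"]))
    return a["size"] * b["size"] / (a["size"] + b["size"]) * sq_dist


def merge(a, b):
    """Combine two clusters and recompute the size-weighted centroid."""
    n = a["size"] + b["size"]
    centroid = [
        (a["size"] * p + b["size"] * q) / n
        for p, q in zip(a["centroid"], b["centroid"])
    ]
    return {"members": a["members"] + b["members"], "size": n, "centroid": centroid}


def ward_cluster(embeddings, max_cost):
    """Greedy Ward merging that stops once the cheapest merge is too costly.

    No cluster count is given up front; max_cost (an assumed threshold)
    decides how many groups remain.
    """
    clusters = [
        {"members": [i], "size": 1, "centroid": list(e)}
        for i, e in enumerate(embeddings)
    ]
    while len(clusters) > 1:
        cost, i, j = min(
            (ward_cost(clusters[i], clusters[j]), i, j)
            for i, j in combinations(range(len(clusters)), 2)
        )
        if cost > max_cost:
            break
        merged = merge(clusters[i], clusters[j])
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)]
        clusters.append(merged)
    return sorted(sorted(c["members"]) for c in clusters)


def name_group(members, annotations, generic=frozenset({"recipe", "food"})):
    """Pick the most common annotation in a group, ignoring generic ones."""
    counts = Counter(
        tag for m in members for tag in annotations[m] if tag not in generic
    )
    return counts.most_common(1)[0][0] if counts else None


# Toy stand-ins for pin embeddings and their text annotations.
embeddings = [(0.0, 0.1), (0.1, 0.0), (0.2, 0.2), (5.0, 5.1), (5.1, 4.9), (4.9, 5.0)]
annotations = [
    ["recipe", "veggie meals", "salad"],
    ["veggie meals", "recipe"],
    ["veggie meals", "tofu"],
    ["appetizers", "recipe"],
    ["appetizers", "dips"],
    ["appetizers", "food"],
]

groups = ward_cluster(embeddings, max_cost=1.0)
names = [name_group(g, annotations) for g in groups]
print(list(zip(names, groups)))
```

The merge threshold is what lets the number of suggested sections fall out of the data instead of being fixed in advance, which matches the reason given for choosing Ward clustering. The naive pairwise search is fine for a toy board, but a board with hundreds of pins would call for a library implementation.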

Pinterest has really been on a roll lately with adding AI-powered features to its apps, including visual search (DT #23) and AR try-on for shopping (DT #33). This post by Dana Yakoobinsky and Dafang He on the company’s engineering blog has the full details on their implementation of this latest feature, as well as some future plans to expand it.

pixel-me.tokyo: GAN for 8-bit portraits

Pixel-me at graduation.

Japanese developer Sato released pixel-me.tokyo, a website that generates an 8-bit style portrait based on user-submitted photos. It works both on human faces and pets. Sato also previously made AI Gahaku (“AI master painter”), which creates portraits in the style of classical painters. He built both projects using pix2pix, a conditional generative adversarial network (cGAN) that’s commonly used for AI art projects. Google developer advocate Kaz Sato (“similar names, but we are not the same person”) wrote up a nice piece for the Google Cloud blog about Sato’s process for the projects.