3D photography using depth inpainting

Layered depth inpainting. (Shih et al., 2020)

Here’s another cool AI art piece that can’t be done justice using just the static screenshot above: Shih et al. (2020) published 3D Photography using Context-aware Layered Depth Inpainting at this year’s CVPR conference. Here’s what that means:

We propose a method for converting a single RGB-D input image into a 3D photo, i.e., a multi-layer representation for novel view synthesis that contains hallucinated color and depth structures in regions occluded in the original view.

Based on a single image (plus depth information), they can generate a 2.5-dimensional representation, realistically re-rendering the scene from slightly different perspectives from which it was originally taken. Contrast that with recent work on neural radiance fields, which requires on the order of 20 - 50 images to work (see DT #36).

Shih et al. set up a website with some fancy demos, which is definitely worth a look; see these gifs on Twitter too. One of the authors also works at Facebook, so I wonder if we’ll one day see Instagram filters with this effect—or if it’ll be a part of Facebook’s virtual reality ambitions. Since the next generation of iPhones will likely have a depth sensor on the back too, I expect we’ll see a lot of this 2.5D photography stuff in the coming years.

Distill: Early Vision in CNNs

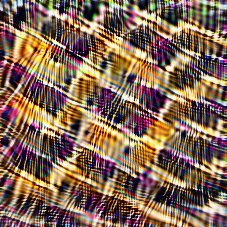

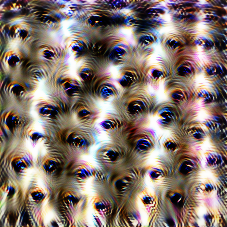

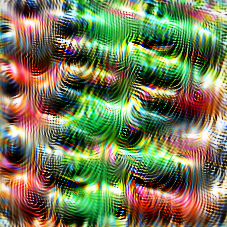

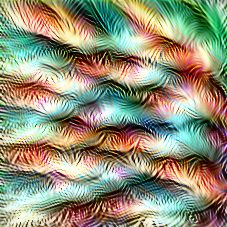

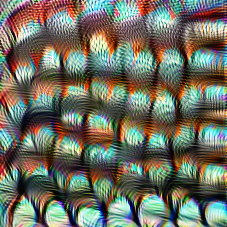

The largest neuron groups in the mixed3a layer of InceptionV1. (Olah et al., 2020)

Chris Olah and his OpenAI collaborators published a new Distill article: An Overview of Early Vision in InceptionV1 . This work is part of Distill’s Circuits thread, which aims to understand how convolutional neural networks work by investigating individual features and how they interact through the formation of logical circuits (see DT #35). In this new article, Olah et al. explore the first five layers of Google’s InceptionV1 network:

Over the course of these layers, we see the network go from raw pixels up to sophisticated boundary detection, basic shape detection (eg. curves, circles, spirals, triangles), eye detectors, and even crude detectors for very small heads. Along the way, we see a variety of interesting intermediate features, including Complex Gabor detectors (similar to some classic “complex cells” of neuroscience), black and white vs color detectors, and small circle formation from curves.

Each of these five layers contains dozens to hundreds of features (a.k.a.

channels or filters) that the authors categorize into human-understandable groups, which consist of features that detect similar things for inputs with slightly different orientations, frequencies, or colors.

This goes from conv2d0, the first layer where 85% of filters fall into two simple categories (detectors for lines and for contrasting colors, in various orientations), all the way up to mixed3b, the fifth layer where there are over a dozen complex categories (detectors for small heads, for circles/loops, and much more).

We’ve known that there are line detectors in early network layers for a long time, but this detailed taxonomy of later-layer features is novel—and it must’ve been an enormous amount of work to create.

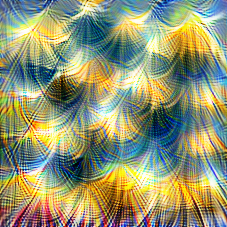

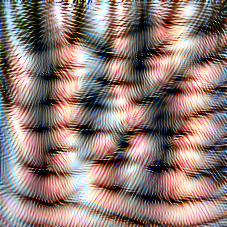

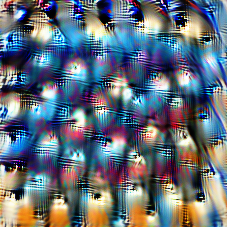

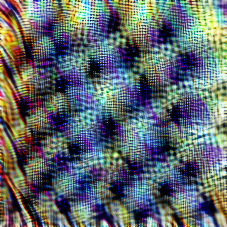

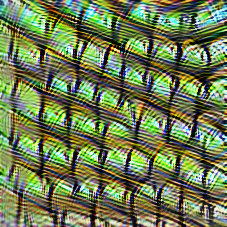

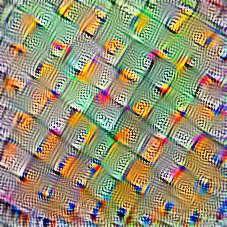

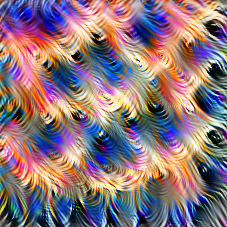

A cicuits-based visualization of the black & white detector neuron group in layer mixed3a of InceptionV1. (Olah et al., 2020)

For a few of the categories, like black & white and small circle detectors in mixed3a, and boundary and fur detectors in mixed3b, the article also investigates the “circuits” that formed them.

Such circuits show how strongly the presence of a feature in the input positively or negatively influences (“excites” or “inhibits”) different regions of the current feature.

One of the most interesting aspects of this research is that some of these circuits—which were learned by the network, not explicitly programmed!—are super intuitive once you think about them for a bit.

The black & white detector above, for example, consists mostly of negative weights that inhibit colorful input features: the more color features in the input, the less likely it is to be black & white.

The simplicity of many of these circuits suggests, to me at least, that Olah et al. are currently exploring one of the most promising paths in AI explainability research. (Although there is an alternate possibility, as pointed out by the authors: that they’ve found a “taxonomy that might be helpful to humans but [that] is ultimately somewhat arbitrary.”)

Anyway, An Overview of Early Vision in InceptionV1 is one of the most fascinating machine learning papers I’ve read in a long time, and I spent a solid hour zooming in on different parts of the taxonomy.

The groups for layer mixed3a are probably my favorite.

I’m also curious about how much these early-layer neuron groups generalize to other vision architectures and types of networks—to what extent, for example, do these same neuron categories show up in the first layers of binarized neural networks?

If you read the article and have more thoughts about it that I didn’t cover here, I’d love to hear them. :)

Rosebud AI's GAN photo models

None of these models exist. (Rosebud AI)

Rosebud AI uses generative adversarial networks (GANs) to synthesize photos of fake people for ads. We’ve of course seen a lot of GAN face generation in the past (see DT #6, #8, #23), but this is one of the first startups I’ve come across that’s building a product around it. Their pitch to advertisers is simple: take photos from your previous photoshoots, and we’ll automatically swap out the model’s face with one better suited to the demographic you’re targeting. The new face can either be GAN-generated or licensed from real models on the generative.photos platform. But either way, Rosebud AI’s software takes care of inserting the face in a natural-looking way.

This raises some obvious questions: is it OK to advertise using nonexistent people? Do you need models’ explicit consent to reuse their body with a new face? How does copyright work when your model is half real, half generated? I’m sure Rosebud AI’s founders spend a lot of time thinking about these questions; and as they do, you can follow their along with their thoughts on Twitter and Instagram.

Google releases TensorFlow Quantum

“A high-level abstract overview of the computational steps involved in the end-to-end pipeline for inference and training of a hybrid quantum-classical discriminative model for quantum data in TFQ. “

Google has released TensorFlow Quantum (TFQ), its open-source library for training quantum machine learning models. The package integrates TensorFlow with Cirq, Google’s library for working with Noisy Intermediate Scale Quantum (NISQ) computers (scale of ~50 - 100 qubits). Users can define a quantum dataset and model in Cirq and then use TFQ to evaluate it and extract a tensor representation of the resulting quantum states. For now Cirq computes these representations (samples or averages of the quantum state) using millions of simulation runs, but in the future it will be able to get them from real NISQ processors. The representations feed into a classical TensorFlow model and can be used to compute its loss. Finally, a gradient descent step updates the parameters of both the quantum and classical models.

A key feature of TensorFlow Quantum is the ability to simultaneously train and execute many quantum circuits. This is achieved by TensorFlow’s ability to parallelize computation across a cluster of computers, and the ability to simulate relatively large quantum circuits on multi-core computers.

TensorFlow Quantum is a collaboration with the University of Waterloo, (Google/Alphabet) X, and Volkswagen, which aims to use it for materials (battery) research. Other applications of quantum ML models include medicine, sensing, and communications.

These are definitely still very much the early days of the quantum ML field (and of quantum computing in general), but nonetheless it’s exciting to see this amount of software tooling and infrastructure being built up around it. For lots more details and links to sample code and notebooks, check out the Google AI blog post by Alan Ho and Masoud Mohseni here: Announcing TensorFlow Quantum: An Open Source Library for Quantum Machine Learning.

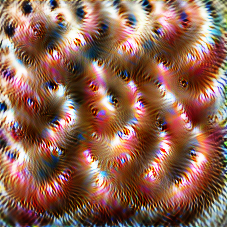

Neural radiance fields for view synthesis: NeRF

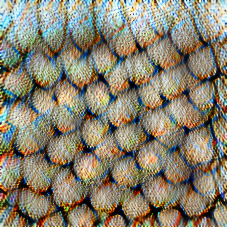

Novel views generated from pictures of a scene.

Representing Scenes as Neural Radiance Fields for View Synthesis, or NeRF, is some very cool new research from researchers at UC Berkeley. Using an input of 20-50 images of a scene taken at slightly different viewpoints, they are able to encode the scene into a fully-connected (non-convolutional) neural network that can then generate new viewpoints of the scene. It’s hard to show this in static images like the ones I embedded above, so I highly recommend you check out the excellent webpage for the research and the accompanying video.