Software 2.0 at Plumerai

The stacked layers of Plumerai’s Larq ecosystem: Larq Compute Engine, Larq, and Larq Zoo.

A few days ago, we published a new blog post about Software 2.0 at Plumerai, which touches on some points that are interesting for productized artificial intelligence at large. As a reminder, Plumerai (and my day-to-day research there) is centered around Binarized Neural Networks (BNNs): deep learning models in which weights and activations are not floating-point numbers but can only be -1 or +1. Larq is our ecosystem of open-source packages for BNN development: larq/zoo has pretrained, state-of-the-art models; larq/larq integrates with TensorFlow Keras to provide BNN layers and training tools; and larq/compute-engine is an optimized converter and inference engine for deploying models to mobile and edge devices.
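To make the "-1 or +1" idea concrete, here is a minimal pure-Python sketch. It is not Larq code: the helper names (`binarize`, `pack_bits`, `binary_dot`) are made up for illustration, and mapping 0 to +1 is an assumption. It shows why BNNs are cheap to run: once values are restricted to ±1, a dot product reduces to an XOR and a bit count.

```python
def binarize(values):
    """Map real numbers to -1 or +1 with a sign function (0 maps to +1 here)."""
    return [1 if v >= 0 else -1 for v in values]


def pack_bits(signs):
    """Encode a +/-1 vector as an integer bitmask: +1 -> bit 1, -1 -> bit 0."""
    mask = 0
    for i, s in enumerate(signs):
        if s == 1:
            mask |= 1 << i
    return mask


def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed +/-1 vectors of length n via XOR and popcount."""
    mismatches = bin(a_bits ^ b_bits).count("1")
    # Matching positions contribute +1 each, mismatching positions -1 each.
    return n - 2 * mismatches


weights = binarize([0.7, -1.2, 0.1, -0.4])
activations = binarize([-0.3, -0.9, 2.0, 0.5])

# Ordinary multiply-accumulate on the +/-1 values...
reference = sum(w * a for w, a in zip(weights, activations))
# ...gives the same result as the bitwise version.
fast = binary_dot(pack_bits(weights), pack_bits(activations), len(weights))
```

Both `reference` and `fast` hold the same value, but the second path only needs bitwise operations, which is the kind of trick an optimized inference engine can exploit.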

Andrej Karpathy, who was previously at OpenAI and is now developing Tesla’s self-driving software, first wrote about his vision for Software 2.0 back in 2017. I recommend reading the whole essay, but it boils down to the idea that large chunks of currently human-written software will be replaced by learned neural networks—something we already see happening in areas like computer vision, machine translation, and speech recognition/synthesis. Let’s look at two benefits Karpathy notes about this shift that are relevant to our work on Larq.

First, neural networks are agile. Depending on computational requirements (running on a high-power chip vs. an energy-efficient one), deep learning models can be scaled by trading off size for accuracy. Take one of the BNN families in our Zoo package, for example: the XL version of QuickNet achieves 67.0% top-1 ImageNet classification accuracy at a size of 6.2 MB, but it can also be scaled down to get 58.6% at just 3.2 MB. Depending on the power and accuracy requirements of your application, you can swap in one for the other without having to otherwise change your code.
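As a rough sketch of what "swapping in one for the other" could look like in application code, the snippet below picks the most accurate variant that fits a size budget. The two size/accuracy pairs are the ones quoted above; the variant names and the `pick_variant` helper are placeholders, not Larq Zoo identifiers.

```python
# Figures are the two quoted in the text; the names are hypothetical labels.
VARIANTS = {
    "quicknet_xl": {"size_mb": 6.2, "top1": 67.0},
    "quicknet_smaller": {"size_mb": 3.2, "top1": 58.6},
}


def pick_variant(max_size_mb):
    """Return the most accurate variant that fits the size budget, or None."""
    fitting = [
        (spec["top1"], name)
        for name, spec in VARIANTS.items()
        if spec["size_mb"] <= max_size_mb
    ]
    return max(fitting)[1] if fitting else None


big_device = pick_variant(8.0)    # enough room for the XL variant
small_device = pick_variant(4.0)  # only the smaller variant fits
tiny_device = pick_variant(1.0)   # nothing fits
```

The rest of the application only sees "a model", so the choice can be driven by a deployment setting rather than a code change.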

Second, deep learning models constantly get better. If we think of a cool new training trick or other optimization for BNNs, we can push it out in an updated version of the QuickNet family. In fact, that’s exactly what we did last week:

A great example of the power of this integrated approach is the recent addition of one-padding across the Larq stack. Padding with ones instead of zeros simplifies binary convolutions, reducing inference time without degrading accuracy. We not only enabled this in [Larq Compute Engine], but also implemented one-padding in Larq and retrained our QuickNet models to incorporate this feature. All you need to do to get these improvements is update your pip packages.
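A toy 1D example hints at why padding with ones is convenient. This is only an illustration with made-up helper names, not how Larq Compute Engine implements it. With zero-padding, the border windows contain a value that is neither -1 nor +1, so they need special handling; with one-padding, every window stays purely binary.

```python
def conv1d_same(signal, kernel, pad_value):
    """'Same'-size 1D convolution (cross-correlation) with a constant pad value."""
    half = len(kernel) // 2
    padded = [pad_value] * half + list(signal) + [pad_value] * half
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


signal = [1, -1, -1, 1]
kernel = [1, 1, -1]

one_padded = conv1d_same(signal, kernel, pad_value=1)
zero_padded = conv1d_same(signal, kernel, pad_value=0)

# With a length-3 kernel over pure +/-1 inputs, every output is 3 - 2 * mismatches,
# so it is always odd. Only the one-padded result has that form everywhere.
one_padded_all_odd = all(v % 2 != 0 for v in one_padded)
zero_padded_all_odd = all(v % 2 != 0 for v in zero_padded)
```

In this toy case the interior outputs are identical and only the border values differ, which is where a zero-padded binary convolution would need an extra correction step.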

I’m super excited about the idea of Software 2.0, and—as you can probably tell from the paragraphs above—I’m pumped to be working on it every day at Plumerai, both on the research side (making better models) and the software engineering side (improving Larq). You can read more about our Software 2.0 aspirations in this blog post: The Larq Ecosystem: State-of-the-art binarized neural networks and even faster inference.

Distill: Zoom in on Circuits

“By studying the connections between neurons, we can find meaningful algorithms in the weights of neural networks.”

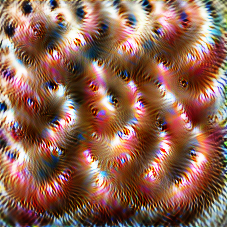

Chris Olah et al. wrote a fascinating new Distill article about “circuits” in convolutional neural networks. The authors aim to reposition the field of AI interpretability as a natural science, like biology and chemistry:

There are two common proposals for dealing with this [lack of shared evaluation measures in the field of interpretability], drawing on the standards of adjacent fields. Some researchers, especially those with a deep learning background, want an “interpretability benchmark” which can evaluate how effective an interpretability method is. Other researchers with an HCI background may wish to evaluate interpretability methods through user studies.

But interpretability could also borrow from a third paradigm: natural science. In this view, neural networks are an object of empirical investigation, perhaps similar to an organism in biology. Such work would try to make empirical claims about a given network, which could be held to the standard of falsifiability.

Olah et al. do exactly this by investigating the Inception v1 network architecture in detail and presenting three speculative claims about how convolutional neural networks work:

- Features are the fundamental unit of neural networks. They correspond to directions. These features can be rigorously studied and understood.

- Features are connected by weights, forming circuits. These circuits can also be rigorously studied and understood.

- Analogous features and circuits form across models and tasks.

For the first two claims, they present substantive evidence: examples of curve detectors, high-low frequency detectors, and pose-invariant dog head detectors for their claim about features; and examples of curve detectors (again), oriented dog head detection, and car + dog superposition neurons for the circuits claim.

As always, the article is accompanied by very informative illustrations, and even some interesting tie-backs to the historical invention of microscopes and discovery of cells. I found it a fascinating read, and it made me think about how these findings would look in the context of binarized neural networks. You can read the article by Olah et al. (2020) on Distill: Zoom In: An Introduction to Circuits.

Unscreen by remove.bg

Landing page for unscreen

Unscreen is a new zero-click tool for automatically removing the background from videos. It’s the next project from Kaleido, the company behind remove.bg, which I’ve covered extensively on Dynamically Typed: from their initial free launch (DT #3) and Golden Kitty award (DT #5), to the launch of their paid Photoshop plugin (DT #12) and cat support (yes, really: DT #16).

Unscreen is another great example of a highly-targeted, easy-to-use AI product, and I’m excited to see it evolve—probably following a similar path to remove.bg, since they’ve already pre-announced their HD, watermark-free pro plan on the launch site.

BERT in Google Search

Improved natural language understanding in Google Search. (Google)

Google Search now uses the BERT language model to better understand natural language search queries:

This breakthrough was the result of Google research on transformers: models that process words in relation to all the other words in a sentence, rather than one-by-one in order. BERT models can therefore consider the full context of a word by looking at the words that come before and after it—particularly useful for understanding the intent behind search queries.

The query in the screenshots above is a good example of what BERT brings to the table: its understanding of the word “to” between “brazil traveler” and “usa” means that it no longer confuses whether the person is from Brazil and going to the USA or the other way around. Google is even using concepts that BERT learns from English-language web content for other languages, which led to “significant improvements in languages like Korean, Hindi and Portuguese.” Read more in Pandu Nayak’s post for Google’s The Keyword blog: Understanding searches better than ever before.
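The "in relation to all the other words" idea can be sketched with a stripped-down self-attention step. This is a simplification under stated assumptions: the 2-d embeddings are made up, and real BERT adds learned query/key/value projections, multiple heads, and position information. The point is only that each token's output mixes in every other token, both before and after it.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections (no learned weights)."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this token against every token in the sentence, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        all_weights.append(weights)
        # The output is a weighted mix of all token vectors.
        outputs.append(
            [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]
        )
    return outputs, all_weights


tokens = ["brazil", "to", "usa"]
embeddings = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]  # toy values, not learned
outputs, weights = self_attention(embeddings)
```

Each row of `weights` is a distribution over all three tokens, so the representation of “to” depends on both “brazil” and “usa” at once rather than on a left-to-right pass.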

Chollet's Abstraction and Reasoning Corpus

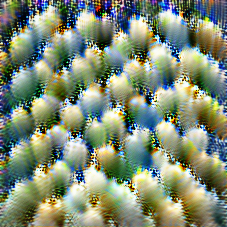

From top to bottom: Chollet’s hierarchy of intelligence, and two sample tasks from ARC. (François Chollet)

Keras creator François Chollet has published his 64-page manifesto on the path “toward more intelligent and human-like” AI in a paper titled The Measure of Intelligence that “formalizes things [he’s] been talking about for the past 10 years.” This is one of the most inspiring papers I’ve read in a long time, and it has many people around the office very excited too. Broadly, Chollet covers three topics: (1) the context and history of evaluating the intelligence of humans and machines; (2) a new perspective of what a framework for evaluating intelligence should be; and (3) the Abstraction and Reasoning Corpus (ARC), his implementation of this framework.

(1) Context and history. In cognitive science, there are two opposing views of how the human mind works:

One view in which the mind is a relatively static assembly of special-purpose mechanisms developed by evolution, only capable of learning what it is programmed to acquire, and another view in which the mind is a general-purpose “blank slate” capable of turning arbitrary experience into knowledge and skills, and that could be directed at any problem.

Chollet explains that early (symbolic) AI research focused on the former view, creating intricate symbolic representations of problems over which computers could search for solutions, while current (deep learning) AI research focuses on the latter, creating “randomly initialized neural networks that starts blank and that derives its skills from training data.” He argues that neither of these approaches is sufficient for creating human-like intelligence, which, as he introduces through the lens of psychometrics, is mostly characterized by the ability to broadly generalize on top of some low-level core knowledge that all humans are born with.

(2) A new perspective. Chollet presents a new framework that is meant to be an “actionable perspective shift in how we understand and evaluate flexible or general artificial intelligence.” It evaluates these broad cognitive generalization abilities by modelling an intelligent system as something that can output static “skill programs” to achieve some task. The system’s intelligence is then measured by how efficiently it can generate these skills. Formally:

The intelligence of a system is a measure of its skill-acquisition efficiency over a scope of tasks, with respect to priors, experience, and generalization difficulty.

(3) Abstraction and Reasoning Corpus (ARC). Chollet finally proposes a practical implementation of the framework. An ARC task, as pictured above, consists of several example before and after grids, and one final before grid for which the intelligent system’s generated skill must figure out the correct after grid. Each task is designed so that the average human can solve it quite easily, and so that it depends only on core knowledge (and not learned things like the concept of arrows). Tasks range from simple object counting to more complex things like continuing a line that bounces off edges. There are 400 tasks to train on and 600 tasks to test on, of which 200 are secret and used to evaluate a competition.
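To give a feel for the task structure, here is a small sketch that follows the train/test layout of the JSON files in the fchollet/ARC repository (lists of input/output grid pairs, with grids as rows of integers). The task itself is a made-up toy, not one of the real ARC tasks, and the brute-force `solve` helper is purely illustrative: it tries hand-written candidate "skill programs" and keeps the first one that explains every example pair.

```python
# A made-up toy task in the ARC train/test layout; not a real ARC task.
task = {
    "train": [
        {"input": [[1, 0], [2, 0]], "output": [[0, 1], [0, 2]]},
        {"input": [[0, 3, 0]], "output": [[0, 3, 0]]},
    ],
    "test": [
        {"input": [[4, 0, 0], [0, 5, 0]], "output": [[0, 0, 4], [0, 5, 0]]},
    ],
}


def identity(grid):
    """Candidate program: return the grid unchanged."""
    return [list(row) for row in grid]


def mirror_horizontally(grid):
    """Candidate program: flip every row left to right."""
    return [list(reversed(row)) for row in grid]


def fits_examples(program, pairs):
    """Check whether a program maps every example input to its output."""
    return all(program(pair["input"]) == pair["output"] for pair in pairs)


def solve(task, candidates):
    """Apply the first candidate consistent with all training pairs to the test inputs."""
    for program in candidates:
        if fits_examples(program, task["train"]):
            return [program(pair["input"]) for pair in task["test"]]
    return None


predictions = solve(task, [identity, mirror_horizontally])
```

A real solver has to generate the skill program itself instead of choosing from a fixed list, which is where the benchmark becomes hard.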

I’ve barely scratched the surface of the paper here, and I highly recommend reading it in full and trying out ARC for yourself!

- The Measure of Intelligence on arXiv: Chollet (2019)

- The Abstraction and Reasoning Corpus on GitHub, including a version you can test yourself on: fchollet/ARC

- Chollet’s Twitter thread with some more background about how the paper came to be.